Getting real about artificial user research. A ChatGPT deep dive

That’s right, another article about how a UX Designer has incorporated ChatGPT into their workflow. But wait, this article won't simply list ‘10 ways you can use ChatGPT in the design process’. Instead, we'll briefly go under the hood to evaluate just how useful the information really is, and whether using ChatGPT could be a viable alternative to conducting user interviews.

To put ChatGPT to the test we came up with a mock brief to navigate the research phase of the design thinking process. We decided to explore the topic of Electric Vehicles (EVs), aiming to uncover the various benefits and pain points encountered by typical people in their search for an EV.

Our objective was to find out what ChatGPT can reliably be used for, and where it should be avoided. To do this we both questioned ChatGPT and conducted our own customer interviews. We wanted to uncover whether the findings from ChatGPT were valid, and whether it could be used as a reliable source in our discovery process.

ChatGPT vs Customer Interviews

To start the project we needed more information on the EV industry, to understand pain points and identify any key brands operating in the space. We began our research by prompting ChatGPT with the following:

“What are some of the reasons why people don't switch to electric cars?”

The result identified key themes such as charging infrastructure, range anxiety, higher upfront costs and charging times. These results were all plausible and informative.

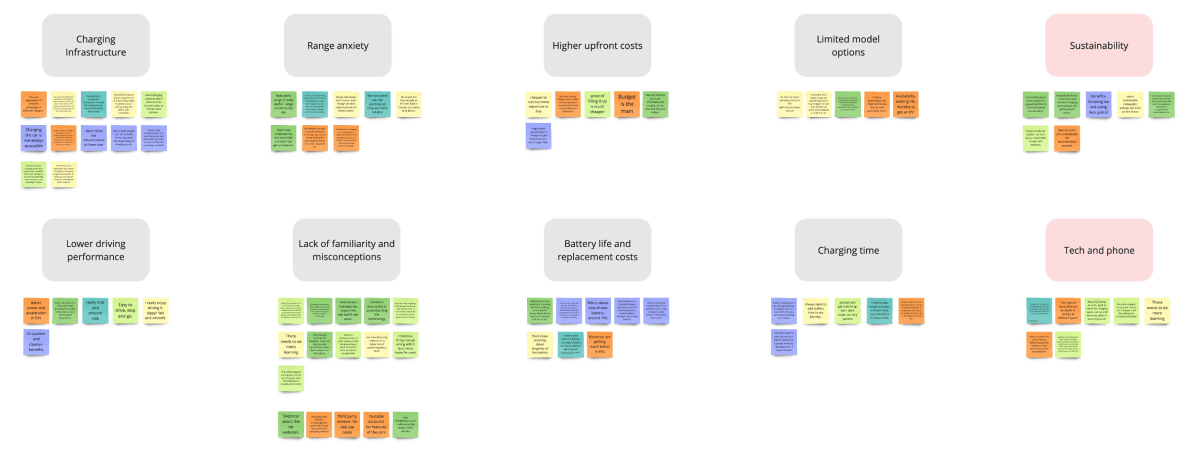

However, to check whether the results corresponded to what people were actually saying, we conducted six interviews with people who either owned an electric vehicle or were interested in purchasing one. We then affinity mapped the interview findings against the themes that ChatGPT had found. It was kind of like a closed card sort but using the ChatGPT themes as a starting point. The outcome was pretty cool…

Out of 10 themes that were uncovered, 8 were already outlined by ChatGPT (grey) and 2 were newly found from the interviews (red). However, a few problems began to arise when we lifted the hood to see what the information actually said. A lot of the information that ChatGPT provided on the themes was very high-level and didn’t get into the details. This is where I believe interviews work much harder, as you can get a more well-rounded understanding of a topic or experience from different perspectives.
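For anyone who wants to repeat this tally on their own project, here is a minimal Python sketch of the comparison. The theme labels are placeholders (our actual theme names are not reproduced here) and `compare_themes` is a hypothetical helper, not part of any tool we used. It only mirrors the counts above: 10 interview themes, 8 of which ChatGPT had already suggested.

```python
def compare_themes(interview_themes: set[str], chatgpt_themes: set[str]) -> dict:
    """Split interview themes into those ChatGPT anticipated and those it missed."""
    anticipated = interview_themes & chatgpt_themes
    new_from_interviews = interview_themes - chatgpt_themes
    return {
        "anticipated": sorted(anticipated),
        "new_from_interviews": sorted(new_from_interviews),
        "share_anticipated": len(anticipated) / len(interview_themes),
    }


# Placeholder labels only: 8 themes suggested by ChatGPT, plus 2 that
# surfaced solely in the interviews.
chatgpt_themes = {f"chatgpt_theme_{i}" for i in range(1, 9)}
interview_themes = chatgpt_themes | {"interview_only_theme_1", "interview_only_theme_2"}

result = compare_themes(interview_themes, chatgpt_themes)
print(f"ChatGPT anticipated {result['share_anticipated']:.0%} of interview themes")
print("New from interviews:", result["new_from_interviews"])
```

A count like this says nothing about depth, which is exactly where the two sources differed.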

Great for an overview, but struggles with the detail

ChatGPT is great for getting a broad understanding of a topic or an industry; one article referred to it as ‘the best desk research tool on the planet’. It breaks down information into very neat, bite-sized paragraphs that act as a perfect starting point for a research project, whether it’s understanding common pain points or conducting competitor analysis. However, as soon as we start substituting the user with ChatGPT in ‘user research’, issues arise and scepticism should set in. The responses from ChatGPT often remain high-level, lacking the ‘A-ha!’ nuances of individual user needs that interviews can uncover.

Further stress-testing ChatGPT

To push our chatty assistant further, we tried some experiments. We wanted to see if it could mimic users from different locations, ages, lifestyles, and more. Does ChatGPT just give generic answers, or can it provide specific insights? We found out…

Insights from multiple countries: We asked it: “What do EV drivers with a family look for from their vehicle in the UK?” and “What do EV drivers with a family look for from their vehicle in Spain?”

Many themes were the same (seating capacity, interior space, safety features) but there were a few differences. As Spain has a warmer climate, it mentioned that customers are looking for ‘climate control’ and, as the country is larger, the ‘ability to do longer trips’ too. What it didn’t cover were things like the size of the market, the range of cars available and stock availability, which would very likely be different.

Insights from multiple locations in the UK: We asked it: “What do EV drivers with a family look for from their vehicle in Taunton?” and “What do EV drivers with a family look for from their vehicle in London?” When testing with two different locations in the same country, ChatGPT again mentioned similar themes, such as safety features. However, there were specific comments on Taunton residents’ ‘Eco-friendly lifestyle’ and how ‘Taunton residents value sustainability and environmental consciousness’. It also mentioned London's Emission-free zones, specifically referencing the ULEZ and congestion charges. So whilst the two locations share many general similarities, ChatGPT can get into some location-specific detail.

Insights from a specific persona’s point of view e.g. gender, age range, lifestyle: We asked it: “What would a working 40 year old parent of two children, living in Manchester, be looking for in an electric vehicle?” and “What would a retired 70 year old, who enjoys swimming, living in Swansea, be looking for in an electric vehicle?” Many similar themes were suggested by ChatGPT. However, it did find some slight differences, including ‘Space and Practicality for children’ vs ‘Comfort and Ease of Entry/Exit’ and ‘Family-friendly features’ vs ‘User-friendly technology’. It clearly can’t drill down into the details of what these audiences need, but it did help us to consider EVs from different points of view in just a few minutes.

Insights on Brand perception: “What are people’s perceptions of Kia?” and “What are people’s perceptions of Volvo?” Kia and Volvo are pretty similar in terms of their offering and target audience, so we wanted to see if ChatGPT could pull out any key differentiating aspects. It managed to call out Kia’s ‘7 year warranty’, which I then checked on the website and found to be correct. Likewise, it called out Volvo’s renowned ‘Scandinavian Design’ philosophy, which was also mentioned on the company website. This was all impressive. However, there was still a lot of ‘waffle’, and it didn’t get into detail such as specifics on technology and cost.

Insights for a niche topic: E.g. “What types of reporting capabilities would be helpful for EV salespeople?” Most of the insights that came through were around generic sales capabilities such as sales funnel analysis, sales performance reports, lead generation reports. The only EV-specific report that was referenced was inventory management reports - which follows along the theme of ChatGPT providing a generalised overview but struggling to get specific on a more niche industry.

Provide Quantitative data: E.g. “What is the percentage of EV drivers in the UK compared to the US? Break down the data.” At first glance ChatGPT can provide quantitative data and stats on the size of the EV market. However, ChatGPT can’t access any data since 2021, so the information is out of date. The results also go on to say that ‘the data provided is a general overview and may not reflect the latest figures’. The results do cite the sources of the information, including the Society of Motor Manufacturers and Traders (SMMT) and the Edison Electric Institute. The problem is that it’s difficult to go back and check these sources, as no links or other provenance details are given.

ChatGPT for Usability testing: E.g. “What are some common pain points on the Kia website?” ChatGPT is a generative AI tool and can’t search the internet, so it can’t provide any real-time data on the Kia website. Instead, ChatGPT ‘can provide you with some common pain points that users may experience on websites in general’. The results are OK, but nothing surprising, and again follow the theme of ChatGPT being great for giving an overview of generic points. It really can’t be a substitute for usability testing a website.

Reliability of sources

Some of our initial questions on ChatGPT were, ‘Where is the information coming from?’ and ‘How do we know that the information we’re getting is accurate?’. We know that ChatGPT is a large language model (LLM), meaning that it is trained on a vast amount of data and selects each word based on how likely it is to come next.

This means that we don’t know the true source of the information, as it’s being generated from multiple sources. Despite it being extremely convincing, we can’t guarantee the accuracy of the information provided. For instance, in the experiment comparing interview findings with ChatGPT, one claim that was disproved by the interviews was that electric vehicles (EVs) have inferior acceleration or driving performance compared to traditional gas-powered cars. This contradicted the experiences shared by several users, who said that the acceleration and driving performance of EVs were, in fact, significantly better. This result is by no means a one-off. Michael Osborne, Professor of Machine Learning at Oxford, said that “LLMs have limited reliability, limited understanding, limited range, and hence need human supervision”.

So that raises the question: should we be using information provided by ChatGPT in our work if we can’t be assured the results or sources are valid? Bard, Google’s AI, could be considered more reliable given that it does provide you with sources, although their authenticity is questionable considering the result that came up on a recent search.

I clicked on the link to validate the source which led me to a 404 page saying the site didn’t exist. Reliable? I’m not so sure.

We tested the provenance of the results by asking ChatGPT for its sources.

Its answer… “As an AI language model, my responses are generated based on a mixture of licensed data, data created by human trainers, and publicly available data. I have not been directly trained on specific sources or have access to proprietary databases. I should note that while I strive to provide accurate and up-to-date information, my responses may not always reflect the most current news or developments in specific industries. The information I provide should be used for informational purposes only and should not be considered a substitute for professional advice or specific sources.”

Regarding our hypothesis, we do not believe that the current results generated by ChatGPT can serve as a substitute for user interviews as a reliable source of truth. Much like someone at the pub can be extremely convincing in explaining why you should get into Cryptocurrency, sometimes it’s best to move on and say, 'Have a good night mate'.

The reliability of ChatGPT has also been called into question in the media, notably in the case of US lawyer Steven Schwartz, who sought legal research from ChatGPT. Despite Schwartz’s repeated inquiries to verify the sources and validity of the cases provided by ChatGPT, he received assurances that the cases were real and could be found on reputable legal databases like LexisNexis and Westlaw. However, it turned out that the cases were entirely fictitious, and Schwartz is now facing a legal case of his own.

Oh, and that ‘Regenerate answers’ button

We were very concerned that it was possible to ‘regenerate answers’, so we tested it out. When regenerating a response, the main difference is in the terminology and language used, rather than any contradiction of the overall content themes. An example of this is changing ‘versatility’ to ‘convenience’. Essentially, it says the same thing in a different way. This doesn’t mean it’s wrong, it just makes it inconsistent from a copy perspective.
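One way to picture why regenerating changes the wording: as noted earlier, an LLM chooses words based on probability, so a fresh attempt can land on a different but similarly likely word. The Python sketch below is a toy illustration only, not how ChatGPT is actually implemented. The probabilities are invented, and ‘flexibility’ is a made-up third option added alongside the two words we saw swapped.

```python
import random

# Hypothetical probabilities for the next word in a sentence about EV benefits.
# These numbers are invented for illustration; a real model scores a huge vocabulary.
NEXT_WORD_PROBS = {"versatility": 0.40, "convenience": 0.35, "flexibility": 0.25}


def pick_word(probs: dict[str, float], rng: random.Random) -> str:
    """Sample one word, weighted by its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]


def most_likely(probs: dict[str, float]) -> str:
    """Always return the single highest-probability word (no randomness)."""
    return max(probs, key=probs.get)


# Each "regeneration" uses a different random seed, so the chosen word can change
# even though the underlying probabilities stay exactly the same.
for attempt in range(1, 6):
    rng = random.Random(attempt)
    print(f"Attempt {attempt}: {pick_word(NEXT_WORD_PROBS, rng)}")

print("Most likely word:", most_likely(NEXT_WORD_PROBS))
```

The themes stay stable because the likely candidates all mean roughly the same thing. Only the surface wording moves.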

ChatGPT is great for doing the heavy lifting in writing tasks

That said, ChatGPT can be extremely useful on the admin side of research, for example writing test scripts, recruitment emails or screener surveys. We used ChatGPT to write a test script for our user interviews. One example question was:

"What do you perceive as the main benefits of electric cars? For example, do you see environmental impact, cost savings, or performance as significant advantages?"

At first it looks pretty good. It asks about the benefits of EVs, which we know is a good place to start. The problem is that the second half is very leading, as it lists some examples the user could talk about. If we had used this, it would have biased the results by prompting users on what to say about their experiences. A simple fix is to manually take out the ‘For example’ sentence and keep only the first part of the question.
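If you are reviewing a long generated script, a rough automated pass can flag this pattern before a human read-through. The sketch below is a simple heuristic under stated assumptions: the marker list is our own and far from exhaustive, `remove_leading_examples` is a hypothetical helper, and splitting on question marks will not suit every script. It is no replacement for reading each question yourself.

```python
import re

# Phrases that often introduce suggested answers (an assumed, non-exhaustive list).
LEADING_MARKERS = ("for example", "for instance", "such as")


def split_questions(text: str) -> list[str]:
    """Split text into parts after each question mark (a rough heuristic)."""
    parts = re.split(r"(?<=\?)\s+", text.strip())
    return [part for part in parts if part]


def remove_leading_examples(question: str) -> str:
    """Drop any part of the question that contains a leading marker."""
    kept = [
        part
        for part in split_questions(question)
        if not any(marker in part.lower() for marker in LEADING_MARKERS)
    ]
    return " ".join(kept)


generated = (
    "What do you perceive as the main benefits of electric cars? "
    "For example, do you see environmental impact, cost savings, "
    "or performance as significant advantages?"
)

print(remove_leading_examples(generated))
```

Run against the question above, this keeps the open first part and drops the prompt-heavy second part.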

ChatGPT is, again, a great place to start when speeding up content-heavy tasks. However, it still requires due diligence to go in and evaluate the quality of the content being provided.

Conclusion

On the whole, ChatGPT is awesome... for certain things. At the start of any UX project I highly recommend using Chatty (we’re friends now) to learn more about whatever industry the project is in, to identify any competitors, to write user interview scripts or even to create email templates.

I would be hesitant to allow ChatGPT to help you cut corners in understanding user needs or to create personas, as we can’t be sure where the information is coming from. Traditional UX research methods such as interviews uncover the nuances of daily life that help you get to the heart of what people want and the problems that they face.

I’m confident that humans' ability to empathise, to understand and to communicate is far more powerful than an AI, at least for now…

ABOUT THE AUTHOR

NICO WIGGIN, UX DESIGNER

Nico joined Bernadette at the start of May after 5 years of agency, start-up, and client-side experience. He began his career in account management in an OOH media agency before turning to UX shortly after the pandemic due to his passion for digital products.

This is part of what we're calling iTest - an ongoing content series by real people in Bernadette, documenting experiments and discoveries in the world of AI - focusing on how humans and machines can collaborate, co-create and co-exist in harmony.

Bernadette is proud cohorts with faith - the ai creative agency from VCCP. We have faith that AI, used responsibly, will be an unparalleled accelerator of human creativity.
