Access granted: How AI is already making digital accessibility smarter

And why it’s not just generative — it’s agentic.

When AI and accessibility appear in the same sentence, the conversation often jumps to automation.

Can AI write alt text? Adjust contrast? Fix code? Increasingly, yes. But at Bernadette, we believe the real opportunity for accessible design goes far beyond quick fixes and accelerating workflows.

While it’s true that generative AI is already helping us automate and augment the work of meeting traditional accessibility standards, we’re also looking ahead to a digital future shaped by sophisticated user-facing AI agents and action models: autonomous, decision-making systems ready to act on behalf of users and adapt to their needs in real time.

That’s why we’ve already begun to prototype what we call ‘artificially mindful interfaces’: context-aware features that anticipate barriers before users hit them and respond with greater nuance, personalisation and immediacy.

And, as we continue to scope the potential this next generation of assistive technologies is set to deliver, we’re staking out creative opportunities — not just for the accessible web, but for the world beyond the screen. But before we get ahead of ourselves, let’s take a look at how AI is reshaping accessibility right now — and how brands can start thinking more ambitiously about AI-driven inclusive design, from the ground up.

How AI is rewriting the accessibility playbook

While the commentary around AI often feels future-focused, many meaningful shifts are already happening — quietly, practically, and behind the scenes.

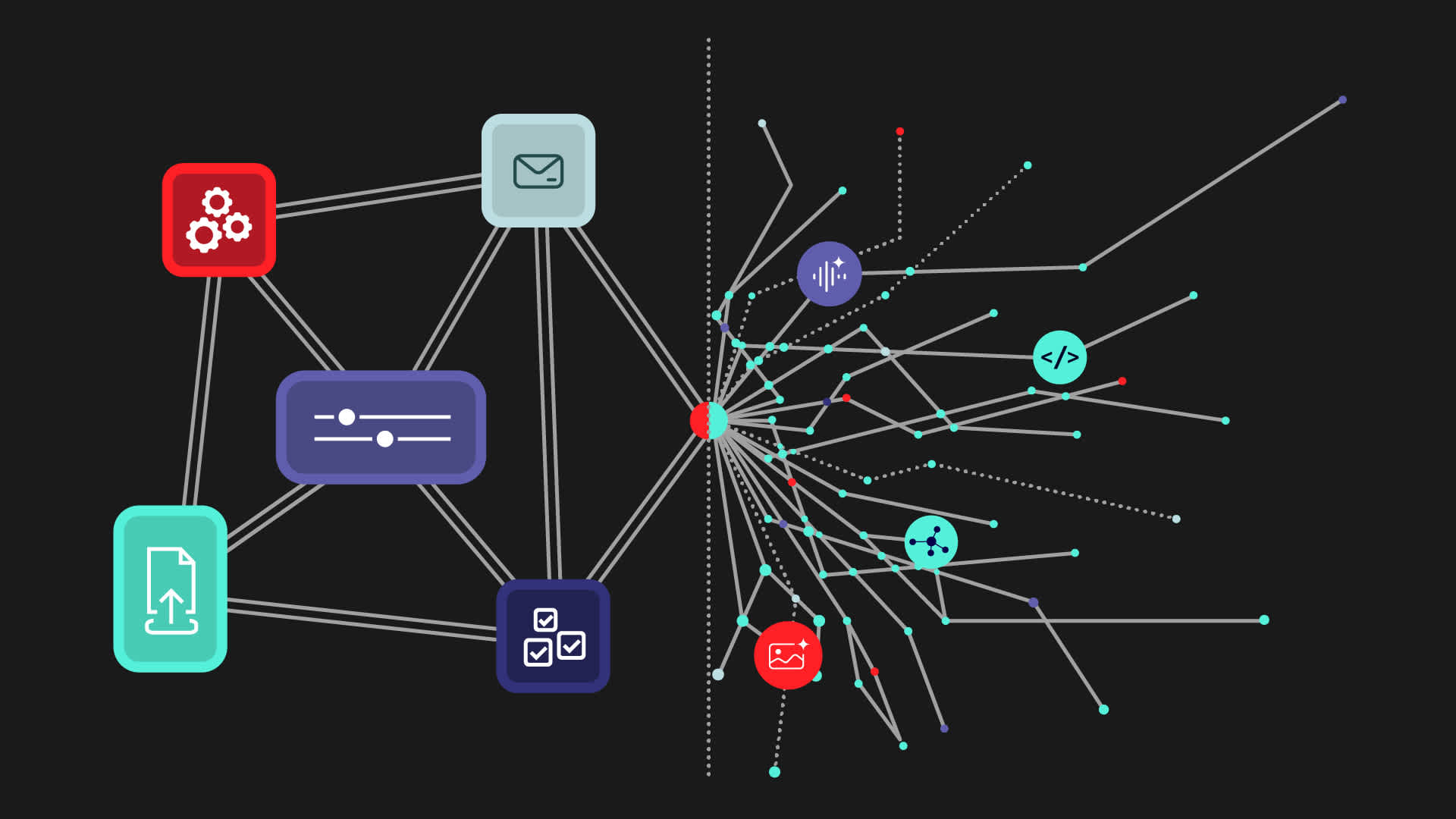

At Bernadette, we’re already integrating AI into the everyday tools and workflows that shape accessible digital experiences. From real-time semantic markup validation to smart alt-text suggestions, we’re using generative systems to identify gaps, flag risks, and guide better defaults, all without slowing down the pace of design.

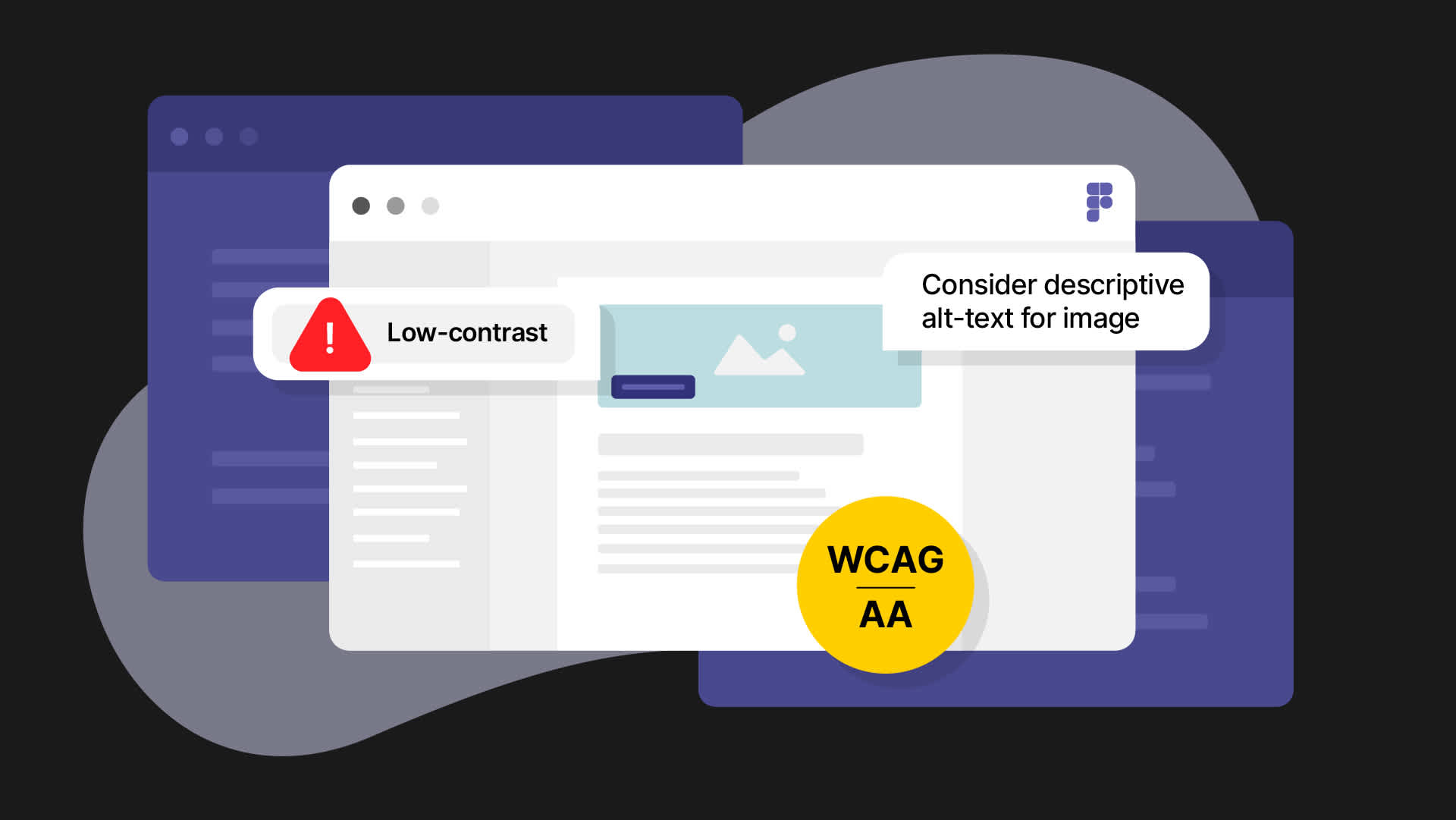

AI-powered plugins now sit inside our design environments, helping us spot contrast issues in real time, generate accessible components, and catch code-level WCAG violations before they reach QA. We also use a customised prompt library that reinforces accessibility essentials such as screen-reader compatibility and keyboard navigation, so that inclusive interaction is built in from the very first design draft.
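For the technically curious: a real-time contrast check of this kind typically comes down to the contrast-ratio formula published in WCAG 2.x, which compares the relative luminance of a text colour and its background. The sketch below is an illustrative Python implementation of that public formula, not the code inside the plugins we use; the function names (`relative_luminance`, `contrast_ratio`, `passes_aa`) are our own, chosen for the example.

```python
def _linearise(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, per the WCAG 2.x formula."""
    c = channel_8bit / 255
    # WCAG 2.x uses a 0.03928 threshold; sRGB specifies 0.04045.
    # The difference has no effect on 8-bit channel values.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance (0.0 for black to 1.0 for white) of a '#RRGGBB' colour."""
    h = hex_colour.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected a #RRGGBB colour, got {hex_colour!r}")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearise(r) + 0.7152 * _linearise(g) + 0.0722 * _linearise(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter colour."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA (SC 1.4.3): at least 4.5:1 for normal text, 3:1 for large text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

For example, mid-grey `#767676` on white comes out at roughly 4.54:1 and passes AA for body text, while `#777777` lands just under 4.5:1 and only clears the 3:1 threshold for large text. A design-tool plugin would run a check like this against every text layer and its background as you work.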

All of this scales from project to project, which lets us maintain a consistent level of rigour whatever we’re working on. Whether we’re supporting a full platform overhaul or a lightweight microsite, these tools help us align with evolving benchmarks like WCAG 2.2 and prepare for regulatory shifts like the European Accessibility Act (EAA), without starting from scratch every time.

The result? Faster prototyping. Fewer reworks. And a stronger foundation for inclusive design that adapts as legislation and user needs continue to change.

How AI is empowering users directly

But perhaps the real breakthroughs lie not in how AI is helping creatives meet industry standards, but in how it’s already reshaping experiences and improving lives on the other side of the screen. Thanks to the adaptable nature of generative tools, developers are finding new ways to meet the longstanding accessibility needs of specific user groups. The result is a marked shift toward digital environments and applications that are easier for everyone to navigate, especially for those historically overlooked by traditional systems.

Neurodivergent users, for example, can now access written content that rewrites itself on demand, adjusting tone and structure in real time and breaking dense material down into focused, digestible summaries.

In fact, when it comes to accessible language, our own work on Kia’s Virtual Assistant set a new benchmark for real-time web messaging in the automotive sector. In collaboration with a third party, we developed a custom-trained large language model (LLM) calibrated to Kia’s tone of voice, with a cadence, clarity, and conversational logic designed to support inclusion and readability from the ground up. The assistant gave clear answers, followed up on incomplete queries, and adapted to a wide range of user needs, making every exchange more accessible, intuitive, and human.

For people with more significant visual impairments, where both reading text and interpreting visual context present challenges, even more powerful AI-driven tools are emerging to meet the moment. A standout example is Be My AI, a GPT-4-powered assistant built into the Be My Eyes app. Designed for blind and low-vision users, it goes far beyond simply describing images: it interprets their context, unpacks cluttered layouts, answers follow-up questions and, in a sign of where AI is headed, even suggests next steps, like recommending recipes based on what’s in your fridge.

Likewise, for deaf people, the UK startup Silence Speaks is closing the gap in BSL interpretation with an AI avatar that translates text, speech, and video into British Sign Language with remarkable fluency, trained not just on vocabulary but on regional dialects, emotional tone, and real-world signing speed.

These examples show how AI is already transforming what’s possible on screen. Beyond the browser, it’s also helping build the connective tissue between people and their environments: from prosthetic devices that adapt to movement patterns, to contextual navigation tools for low-vision users, to smart home systems that respond to voice, non-verbal cues or gestures, the reach of AI is expanding rapidly.

These solutions point to a broader definition of tech-assisted accessibility. It’s this breadth of application, reaching into the physical world, our homes, public spaces, and even our own bodies, that looks set to define the future of accessible design, and it’s already giving us some big ideas.

The shift to truly adaptive design

As impressive as today’s tools might seem, they’re only the beginning.

We’re anticipating the next evolution in AI — pre-emptive systems that won’t just generate content or audit interfaces on request, but will operate proactively on behalf of the user. This is the emerging realm of agentic AI: intelligent systems that interpret context, anticipate needs, and take meaningful action autonomously.

Put simply, these agents are learning to read the room, as well as the screen, and respond based on context and reasoning. For accessibility, that’s a game-changer.

Imagine an interface that doesn’t just flag a contrast issue — it corrects it automatically, based on ambient light or time of day. A reading assistant that senses when your attention dips and rewrites a dense paragraph into something shorter, clearer, more digestible. Or a navigation tool that doesn’t just react to your inputs — it understands your habits, your stress signals, even your routine, and guides you accordingly.

And it’s not just interfaces that are set to evolve — even typography is on the cusp of becoming responsive. In its 2025 Re:Vision trends report, Monotype — the global type giant behind Helvetica — explores how AI could soon drive ‘reactive type systems’ that adapt to emotional and environmental cues. Fonts might sharpen when you’re focused, soften when your gaze drifts, shift contrast based on light levels, or adjust emphasis depending on reading speed and comprehension. It’s a speculative but powerful vision: text that flexes in real time to meet you where you are — cognitively, visually, emotionally. As with all agentic design, the end goal isn’t just automation — it’s deeper connection through intelligent, human-aware interaction.

All of this points to a future of accessible design that’s increasingly about building systems that adapt to people — emotionally, cognitively, physically — rather than asking people to adapt to the system.

At Bernadette, agentic AI is an emerging concept that we’re watching closely. We don’t claim to have all the answers yet. But by embedding curiosity, ethics, and experimentation into our design process, we’re already laying the groundwork for a future where smarter tech brings new digital experiences to life for everyone.

What this means for Bernadette clients

Whether we’re launching immersive campaigns like My Cadbury Era, powering platforms through O2’s Oxygen design system, or deploying Daisy (a fraud-fighting AI “granny” who chats with scammers to protect vulnerable users), we apply the same AI-enhanced accessibility standards across every brand and deliverable, and every output benefits from accessible component libraries by default.

But for clients working with Bernadette, the opportunity isn’t just to meet today’s standards but to help shape tomorrow’s by exploring what these technologies mean for people and brands. Together, we can unlock the creative potential of AI-driven inclusive design and emotionally intelligent experiences.

Need help with digital accessibility?